Since the dawn of civilisation, humans have questioned the workings of the natural world around them and their own place in the Universe. Through a long process of investigation over millennia, mankind has built up an understanding of Nature and the wider cosmos.

Each successive generation has expanded the horizon of our knowledge and in the process extended the boundary of the known Universe. From Ptolemy and Copernicus and through to the modern day, at every stage scientific discoveries have refined and redefined our picture of the Cosmos and our place within it.

But this journey of discovery, as with all fields of science, has not been a smooth ascent from lower to higher planes of knowledge. Rather, the process develops in a dialectical manner: in each case, an accumulation of evidence builds up that is in contradiction with the established theory; a radical change in outlook is needed to square the circle and continue taking understanding forward; and with gradual improvements in our models, we pave the way for qualitative theoretical changes, which in turn allow for further advances.

Such qualitative leaps, meanwhile, are rarely easy, but require a dramatic and revolutionary break with the established scientific paradigm, which is frequently backed up by the weight of past prejudices and the conservative interests of the status quo. Thus it was with the Copernican and Galilean revolution, which challenged the old geocentric view of the world – championed and defended vehemently by the Church – that placed the Earth at the centre of the solar system.

Now, in the 21st century, standing on the shoulders of giants such as Einstein and many others, we are able to see further than ever before. Thanks to the research of previous generations, we have developed an extraordinary understanding of the Universe and its laws – from the accurate predictions provided at the atomic and sub-atomic level by quantum mechanics, to the theories of special and general relativity and their explanations of gravity, motion, space and time.

For many years now, however, storm clouds have been amassing on the horizon. There has been an accumulation of evidence and inconsistencies that bring the current cosmic models into question. Deep and fundamental problems with the existing theories remain unanswered and years of research into new ideas have led nowhere. In short, modern cosmology is in crisis.

What do we know?

The current cosmological theories can be broadly divided in two – and then in two again. At the atomic and sub-atomic scale we have quantum mechanics and the Standard Model of Particle Physics (SMPP). At the scale of stars and galaxies – and even larger – we have Einstein’s theories of general relativity and the Standard Model of Big Bang Cosmology (SMBBC).

The SMPP describes the veritable zoo of particles that are said to be the “fundamental building blocks” of matter, consisting of particles called leptons, such as the electron and the neutrinos, and a variety of particles called quarks, which make up protons and neutrons. In addition, the SMPP explains the behaviour of three of the four forces of nature: the electromagnetic force (electromagnetism, including light and magnetic repulsion and attraction); the weak nuclear force (responsible for radioactive decay); and the strong nuclear force (which binds protons and neutrons). The fourth force is gravity, which causes all matter to be mutually attracted. It is significantly weaker than the other three, but operates on a vast scale; it is not included in the SMPP, but is explained instead by general relativity.

The three forces within the SMPP are said to be transmitted between particles of matter by bosons – force-carrying particles – such as the photon, which carries the electromagnetic force. Furthermore, the SMPP explains that all matter has the property of mass because of its interaction with the Higgs field, via the Higgs boson – the so-called “God particle”, the discovery of which was initially announced by scientists in July 2012, with later confirmation in March 2013. This came after a 40-year search, which included the construction of the Large Hadron Collider.

Quantum mechanics aims to describe the behaviour of the particles covered by the SMPP. In particular, quantum mechanics attempts to explain how such particles can be considered to behave like both particles and like waves. Light, for example, long considered an electromagnetic wave, was shown by Einstein in 1905 to be composed of massless particles, photons, with discrete values of energy proportional to the frequency of the wave. Vice-versa, the famous “double-slit experiment” showed that a stream of quantum particles, when fired at a sheet with two slits in it, would produce a pattern on photographic film normally associated with the interference produced by interacting waves.
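
The proportionality between a photon’s energy and the frequency of the wave is usually written as the Planck-Einstein relation. As a rough worked example, the frequency used below (green light, roughly 5.5 × 10^14 Hz) is an illustrative assumption that does not come from the text; h is Planck’s constant.

```latex
\begin{align*}
E &= h\nu, \qquad h \approx 6.626 \times 10^{-34}\ \mathrm{J\,s} \\
% Illustrative assumption: green light, \nu \approx 5.5 \times 10^{14} Hz
E &\approx \left(6.626 \times 10^{-34}\ \mathrm{J\,s}\right)\left(5.5 \times 10^{14}\ \mathrm{s^{-1}}\right) \\
  &\approx 3.6 \times 10^{-19}\ \mathrm{J} \approx 2.3\ \mathrm{eV}
\end{align*}
```

Each photon of a given frequency carries this fixed quantum of energy; a brighter beam of the same colour contains more photons, not more energetic ones.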

In the quantum world, the mechanical notions of Newton’s laws of motion are replaced with probabilities. According to certain interpretations – such as that of the “Copenhagen school” – the properties of particles do not exist objectively, i.e. independently of the subjective observer, but are determined by the very act of measurement and observation itself. Particles appear and disappear; they both exist and do not exist at the same time. In place of predictability, quantum mechanics introduces only uncertainty. Where we once had cause-and-effect, suddenly we find ourselves plunged into randomness.

At the other end of the scale we have Einstein’s theory of special relativity, which explains the relative nature of space and time; that is, the way in which lengths contract and time slows down for matter as it approaches the speed of light, which (in a vacuum) is constant and usually denoted as c. Einstein’s theories include the important assumption that nothing in the Universe can travel faster than the speed of light.
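
The size of these relativistic effects is governed by the Lorentz factor. The sketch below uses the standard textbook formulas for uniform motion at speed v; the chosen speed of 0.6c is purely an illustrative assumption.

```latex
\begin{align*}
\gamma &= \frac{1}{\sqrt{1 - v^2/c^2}}, \qquad
\Delta t = \gamma\,\Delta\tau, \qquad
L = \frac{L_0}{\gamma} \\
% Illustrative assumption: v = 0.6c
\gamma\big|_{v = 0.6c} &= \frac{1}{\sqrt{1 - 0.36}} = \frac{1}{0.8} = 1.25
\end{align*}
```

Here Δτ is the time elapsed on the moving clock and L_0 the length of an object at rest. At 0.6c, one second on the moving clock corresponds to 1.25 seconds for the stationary observer, and a one-metre rod is measured as 0.8 metres along its direction of motion. As v approaches c, γ grows without limit.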

General relativity, meanwhile, explains the gravitational force in terms of the interaction between matter and the notion of space-time. Space-time is a joint fabric of the three spatial dimensions and the dimension of time that is curved under the influence of matter. According to general relativity, the curvature of space-time, which is caused by matter, in turn affects the motion of matter. Thus we see a dynamic interaction between matter and space-time, in which one conditions the other, and from which the force of gravity emerges.
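
This two-way interaction is usually expressed in two standard equations, given here in their common textbook form (without the cosmological constant) as a sketch rather than a full treatment. The first says how matter and energy curve space-time; the second says how a freely falling body moves through that curved space-time.

```latex
\begin{align*}
% Einstein field equations: matter-energy (right) determines curvature (left)
G_{\mu\nu} &= \frac{8\pi G}{c^4}\, T_{\mu\nu} \\
% Geodesic equation: curvature (via \Gamma) determines the motion of matter
\frac{d^2 x^{\mu}}{d\tau^2}
  + \Gamma^{\mu}_{\alpha\beta}\,
    \frac{dx^{\alpha}}{d\tau}\frac{dx^{\beta}}{d\tau} &= 0
\end{align*}
```

Here G_{μν} describes the curvature of space-time, T_{μν} the distribution of matter and energy, G is Newton’s gravitational constant, and the Γ symbols are computed from the curved geometry.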

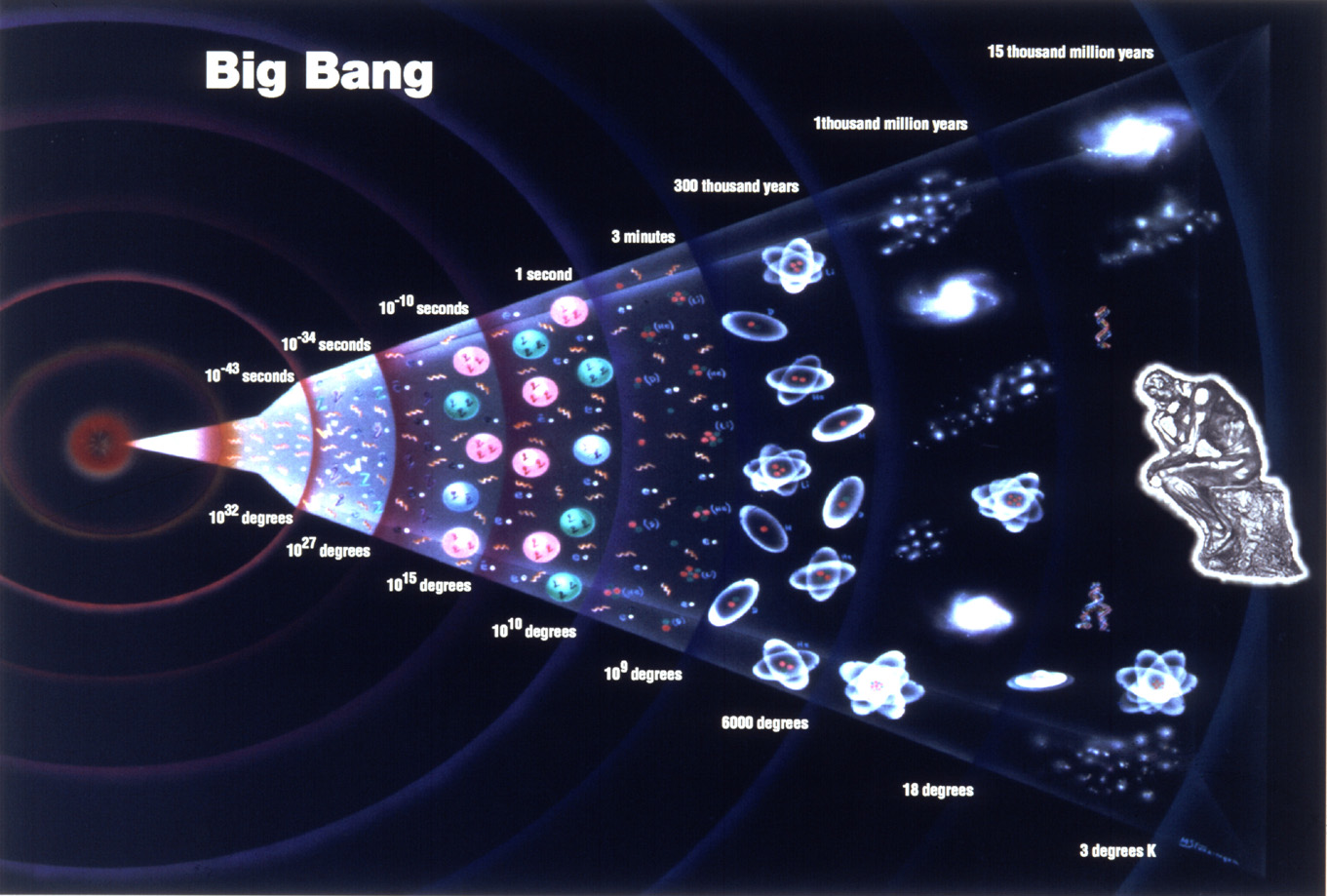

Finally, we have the Standard Model of Big Bang Cosmology (SMBBC), which ultimately attempts to explain the nature of the Universe as a whole, including its origins and its history. The fundamental basis of the SMBBC is the idea that the Universe has a beginning, and before this beginning there was nothing: neither space nor time existed. Up until 1917, when Einstein tried to apply the equations of general relativity to the Universe as a whole, the prevailing scientific opinion was that the Universe was static and eternal. Einstein’s calculations, however, showed that the Universe is dynamic; his conclusion was that the mutual force of gravity between matter would cause instability, with the Universe ultimately collapsing in on itself.

In 1929, observations by the American astronomer Edwin Hubble provided evidence which suggested that galaxies, far from collapsing in, are in fact moving away from one another. The conclusion drawn from these observations was that, if everything is moving away from everything else, there must have been a point in time and space when everything was together; a point of origin for the entire Universe. This “origin” event was dubbed the “Big Bang”, a term first used disparagingly by the English astronomer Fred Hoyle to describe this cosmological creationism.
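
The reasoning from receding galaxies to a moment of origin can be sketched with Hubble’s law, in which recession velocity is proportional to distance. The value of the Hubble constant used below (about 70 km/s per megaparsec) is an assumed round figure, and the estimate crudely supposes that the rate of expansion has been constant throughout.

```latex
\begin{align*}
v &= H_0\, d \\
% Time for a galaxy at distance d, receding at constant v, to have covered d
t_H &= \frac{d}{v} = \frac{1}{H_0} \\
% Assumed value: H_0 \approx 70 km/s/Mpc, with 1 Mpc \approx 3.086 \times 10^{19} km
t_H &\approx \frac{3.086 \times 10^{19}\ \mathrm{km}}{70\ \mathrm{km\,s^{-1}}}
     \approx 4.4 \times 10^{17}\ \mathrm{s}
     \approx 1.4 \times 10^{10}\ \text{years}
\end{align*}
```

Because the distance cancels, every galaxy yields the same figure of roughly 14 billion years, which is why the extrapolation points to a single common moment rather than a different one for each galaxy.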

Together these modern theories – the SMPP, quantum mechanics, special and general relativity, and the SMBBC – form the current cosmological models used to describe the fundamental laws of the Universe. For the best part of a century, attempts have been made by theoretical physicists, including Einstein and his contemporaries, to combine all four natural forces into a single “Theory of Everything”, but to no avail. And, as quickly becomes apparent upon further description and investigation, rather than explaining the fundamental laws, these models are themselves full of contradictions and fundamental flaws.

Dark matter and dark energy

It seems that the ability of the current models and theories to explain the observations and evidence at our disposal has reached its limit. From the small, sub-atomic scale to the cosmologically large, contradictions have emerged at every turn.

Starting backwards with the SMBBC, we immediately encounter problems that are, quite literally, massive. To be specific: where is all the mass? From measurements of the speeds of astronomically large objects, such as galaxies and stars, and the strength of the gravitational effects needed for such speeds, it has been consistently inferred that the vast majority of the mass in the Universe appears to be “missing”. Estimates for this apparently non-existent matter are astonishingly high, with 90% of the mass needed for observations to make sense (based on current theories) apparently missing. This is not exactly a small statistical error!

The term “dark matter” is now commonly used to describe this missing mass. To account for this, scientists have begun a search for “WIMPs”, or weakly interacting massive particles; that is, for a type of matter that is hard to see or detect, but which has powerful gravitational effects. Up to now, the search for possible WIMP candidates has proved elusive and none of the standard cosmological theories shed any light on where this enormous quantity of so-called dark matter may be hiding.

Next on the list of glaring problems is “dark energy”. Despite its similar name to dark matter, this is an entirely different problem within the SMBBC, and is related to the question of the expanding Universe. In the late 1990s, further astronomical observations indicated that not only are galaxies all moving away from one another, but that the speed of this expansion is actually increasing. The galaxies are, it seems, accelerating away from one another. It is understood in all standard physics that acceleration only comes about when a force is applied. The implication of accelerating galaxies, therefore, is that there must be a force, opposite to and greater than the mutual attraction of gravity, and that this force is accelerating matter apart in all directions.

Einstein had originally introduced this idea with his famous “cosmological constant” – effectively an arbitrary number introduced to fudge his equations to create a Universe that was held in a steady and stable equilibrium, rather than collapsing in on itself due to the effects of gravity. Einstein later admitted that this was indeed simply a fudge, with no empirical or sound theoretical evidence behind it, and he described the cosmological constant as “the biggest blunder of his life.”

With the latest observations, however, the cosmological constant was reintroduced in the form of “dark energy” – energy associated with empty space that would act to push matter apart. But again, this is no small error: the latest calculations indicate that dark energy should account for 73% of all mass-energy in the Universe; dark matter should make up a further 22% – leaving the physical matter and radiation that we can actually detect to account for only 5% of the mass-energy that observation suggests there must be![1]

To give a name to phenomena, however, does not explain them. Frederick Engels, with Karl Marx, was one of the founders of scientific socialism and when arguing against those scientists who were satisfied with simply attaching the label “force” as an explanation to cover any gap in their knowledge, he commented,

“Just because we are not yet clear about the ‘rather complicated conditions’ of these phenomena, we often take refuge here in the word force. We express thereby not our knowledge, but our lack of knowledge of the nature of the law and its mode of action.”[2]

Just as Engels polemicised against nineteenth century scientists, in the twenty-first century we can justifiably criticise those scientists who are happy with covering up gaping holes in their theories with phrases like “dark matter” and “dark energy”.

The Big Bang

An even more fundamental issue in relation to the Standard Model of Big Bang Cosmology is the question of the Big Bang itself. The main evidence for the theory of a “Big Bang”, an event in time and space when all matter in the Universe was concentrated at a single point, is the observation that astronomical objects, such as galaxies, are moving away from one another, implying a common point from which this motion began.

This concept of a “Big Bang”, involving a single concentrated point of all matter – known as a singularity – throws up a whole host of problems for cosmologists that are as yet unresolved. The first is that in such a theoretical singularity the density of matter would be infinite, at which point all the known laws of physics would break down. Secondly, the question arises as to where the energy for such an almighty explosion would come from. Some have suggested that the Big Bang, and the resultant creation of the Universe, was simply the product of a “quantum fluctuation”, that is, a random disturbance in space-time. But if nothing – no matter, energy, motion, space, or time – existed before the Big Bang, how could there be any physical laws – including quantum mechanics – that would have any meaning? A “quantum fluctuation” of what, from what and inside of what?

Finally, and most importantly, on a related note to the above: what was said to have existed before the Big Bang? Some suggest that this moment was the point of creation for the entire Universe; the original source of all motion; the prime mover. So what came before? Some modern theories suggest there was a Universe of matter in pure stasis – that is, a Universe with no motion. What then set the Universe in motion? What force – external to the Universe – could provide this leap from stasis to dynamism? As Engels explains in his polemics against Dühring, in order to suggest such a leap from the static to the dynamic, one must ultimately resort to God:

“If the world had ever been in a state in which no change whatever was taking place, how could it pass from this state to alternation? The absolutely unchanging, especially when it has been in this state from eternity, cannot possibly get out of such a state by itself and pass over into a state of motion and change. An initial impulse must therefore have come from outside, from outside the Universe, an impulse which set it in motion. But as everyone knows, the “initial impulse” is only another expression for God.”[3]

The idea that, rather than a leap from pure stasis to dynamism, there was perhaps nothing at all and then something – i.e. the creation of the entire Universe from nothing, including all matter and energy – is equally absurd and amounts to the same thing. As Engels continues:

“Motion is the mode of existence of matter. Never anywhere has there been matter without motion, nor can there be…Matter without motion is just as inconceivable as motion without matter. Motion is therefore as uncreatable and indestructible as matter itself…the quantity of motion existing in the world is always the same. Motion therefore cannot be created; it can only be transferred.”[4]

From nothing can come precisely nothing – this is a fundamental tenet of physics and of dialectical materialism, and it is expressed by the scientific law of the conservation of energy: energy can neither be created nor destroyed. Such talk of the “beginning of time”, therefore, is pure nonsense. And yet this – this modern day creation myth – is the dominant paradigm within the SMBBC.

Time and space

As explained above, the current SMBBC model is based on the idea of the Big Bang at a moment when all the matter of the Universe had been supposedly compressed into a single, infinitesimally small point. After this initial creation, according to the model, the Universe underwent a rapid expansion known as inflation.

Recent widely-reported observations have been cited as evidence in support of this inflationary model of the early Universe. It should be noted, however, that, whilst the inflation theory helps to answer several empirically observed inconsistencies, in its current form the theory still generates a whole new set of questions and problems. For example, what is the cause of this inflation? And where does the energy for such a rapid expansion come from?

The main contender for the answer to these questions is the purely speculative hypothesis of a new particle, the inflaton, which is said to drive the process of inflation. But such an answer is, in reality, not an answer at all. As with so many other areas of modern cosmology, such as the examples of WIMPs and dark matter, the theoretical physicists have simply tried to explain a phenomenon by assigning it a new, never-before-observed particle. Such an “explanation” does nothing but push the problem back by one step. One must now ask: what are the properties of the inflaton particle? How do these properties arise? And why do these properties cause the process of inflation?

Most importantly, however, such inflationary theories – whether true or false – do not help to overcome the main contradiction in the Big Bang model: the fact that you cannot have a “beginning of time” which marks the creation of something from nothing.

Einstein’s initial preference was for a “steady-state” Universe – one of fixed size that had no beginning and no end. Following the Hubble observations that galactic objects were moving away from one another, Einstein proposed an “oscillating” or “cyclic” Universe to allow for the idea of a Big Bang, but without having to resort to the idea of a “beginning of time”. In the cyclic Universe, there is a perpetual cycle of expansion and contraction, leading to a series of Big Bangs and so-called Big Crunches.

A modern version of the cyclic Universe has been proposed by the proponents of M-theory, an extension of string theory, which is an attempt to create a cosmological “Theory of Everything”. This M-theory cyclic Universe is based on the idea that our Universe exists as a four-dimensional membrane – or simply “brane” – within another higher dimensional space containing other “branes”. These branes oscillate and at certain points meet. Such collisions, which occur cyclically, lead to an enormous release of energy and creation of matter, which from the perspective of the observer on the brane gives the impression of a Big Bang.

Unfortunately for the proponents of this cyclical “brane” theory, there is no actual observable evidence or proof for such a hypothesis, nor can there ever be. Such “science” is mere conjecture, based, like so much in modern cosmology, on nothing but mathematical constructions – equations that are increasingly abstracted and divorced from all reality.

All of these theories – whether it is the standard SMBBC model, the steady-state Universe, or the cyclic Universe – suffer from a similar problem, in that they envisage a closed, finite Universe, a bounded space that exists with nothing outside of it. But how can there be a boundary to the Universe? What is beyond this boundary? Nothing? To talk of an “edge of the Universe” is as nonsensical as to talk of the beginning of time.

Any proposal of a finite, bounded Universe raises within itself the idea of something beyond this boundary, which in turn demonstrates the absurdity of placing limits to the Universe. As Hegel, the great German dialectician, remarked in his Science of Logic, “It is the very nature of the finite to transcend itself, to negate its negation and to become infinite.”[5]

To overcome the absurdity of the Universe having an “edge”, the analogy of a balloon’s surface is often used: the three-dimensional space of our Universe is, apparently, like the two-dimensional surface of a balloon – something that is finite, but which has no boundary. Like the balloon, the analogy follows, our bounded Universe is able to expand (or contract) without the need for any “edge”.

The balloon analogy, however, actually demonstrates the absurdity that the analogy aims to overcome, for the idea of a balloon’s curved surface expanding only makes sense if there is a third dimension – at right-angles (i.e. perpendicular) to the balloon’s surface – for the expansion to move into. The surface of the balloon is itself a boundary. Similarly, if the Universe is thought to be finite but unbounded, any expansion requires there to be something outside of the Universe into which the expansion can take place. Such a concept of the Universe makes no sense. The Universe – by definition – means everything that exists. If there is something beyond the boundary, some unoccupied space or potential space, then this too is part of the Universe.

Furthermore, such concepts of a finite Universe are vague about what it is that is finite. The “Universe” is not a thing in itself, but a name for the collection of all things; a word for everything that exists – i.e. for all physical matter. A finite Universe, therefore, means a finite amount of matter, which again implies an “edge of the Universe”, beyond which no matter exists.

The whole history of science has been one in which the limits of the known Universe have constantly been expanded. Once we believed that the Earth was the centre of the Universe, with nothing above but the heavens. With the advance of more and more powerful telescopes, we have been able to look further into space, finding other planets, stars, and galaxies in the process. The further we look, the more we find. Where once there was scepticism about the existence of other planets, now hundreds have been found by sophisticated telescopic measurements, including apparently Earth-like planets hundreds of light-years away. Yet all talk of a finite Universe – with a beginning of time and a boundary in space – erects a barrier to what can be known, a mystical wall separating us from that which is apparently beyond the realms of science.

The problem for many, it seems, in terms of proposing both the beginning of time and a boundary to space, is the question of infinity. Mathematicians have sought to banish infinity at every step, considering it a seemingly unfathomable and impossible concept. But mathematics is only an abstraction – an approximation of an infinitely complex reality that can never be fully captured by any equation, model, or law.

To talk of a “beginning of time” is as absurd as to talk of an “end of time”, for, as Engels explains, to conceive of an infinity with a beginning and no end is the same as to imagine an infinity with an end but no beginning:

“It is clear that an infinity which has an end but no beginning is neither more nor less infinite than that which has a beginning but no end.”[6]

The Universe can only be understood as a dialectical unity of opposites: an infinity of finite matter that is itself infinitely divisible and transformable. That is to say, there is an infinite amount of matter – matter that is itself finite in size and endlessly changing. All attempts to banish this infinity from cosmology have only led to even greater riddles and confusion, to talk of “singularities” where all the laws of physics break down. But a singularity is nothing but a theoretically infinitesimally small point, which, in turn, is simply an inverted infinity. Far from removing infinity from the Universe, therefore, the cosmologists have merely re-introduced it by the back door.

All attempts to remove the contradiction of infinity from our explanations of the Universe, therefore, merely serve to create new insoluble contradictions elsewhere, as Engels explained:

“Infinity is a contradiction, and is full of contradictions. From the outset it is a contradiction that an infinity is composed of nothing but finites, and yet this is the case. The limitedness of the material world leads no less to contradictions than its unlimitedness, and every attempt to get over these contradictions leads, as we have seen, to new and worse contradictions. It is just because infinity is a contradiction that it is an infinite process, unrolling endlessly in time and in space. The removal of the contradiction would be the end of infinity.”[7]

The infinity of the Universe is an objective reality that cannot be wished away by mathematical trickery. This reality of the Universe lies in the unity of opposites, of the infinite and the finite: an infinite collection of finite things, with no boundary, no beginning, and no end.

Bad infinity

Various modern theories have attempted to overcome the absurdity of a “beginning of time” in different ways. First up is the concept that the Big Bang, rather than being a dramatic expansion from a singularity, was a moment of “phase change”, analogous to the way in which liquid water turns to ice. In this theory, the Universe is hypothesised to have been amorphous prior to the phase change, with space and time “crystallising” from this formlessness.

The idea of an evolving and developing Universe is a step forward as compared to the idea of an infinitely steady-state or cyclical, periodically repeating Universe. Both the idea of a steady equilibrium and that of a cyclic Universe portray a mechanical view of infinity – an idealistic infinity that arises out of the timelessness of abstract equations. All real equilibria in nature are dynamic equilibria involving change, the result of the mutual interactions of matter in motion.

For this very reason, real equilibria and periodic motions in the Universe are not eternal, but are temporary phenomena. Whilst we may see a certain repetition and stability (equilibrium) at all levels in nature, these are only ever phases in the continually and dialectically evolving development of processes. This relationship between stability, repetition and change is expressed by the dialectical law of quantity and quality, which shows how all change in nature, history, and society, takes place through the combination of gradual (and often imperceptible) quantitative changes, which eventually pave the way for qualitative change – tipping points of radical or revolutionary transformation.

In this way, the idea of the Big Bang being simply a “phase change” – representing not the beginning of time, but rather a qualitative turning point in the evolution of the Universe – is a step forward, compared to the idea of a steady-state or cyclical Universe, or a Big Bang that marks the “beginning of time”. However, the “phase change” theory is itself confused, for it talks of time and space as though they are themselves tangible, material things. Space and time, however, rather than being material things in-and-of themselves, are relational expressions between actual material things – relationships of matter in motion.

Matter and motion are inseparable. Motion is the mode of existence of matter. But for matter to have motion, it must change position over a given change in time. Space and time, therefore, are properties of motion in matter, which – like all other properties – express the relationships between things. The concepts of space, time, matter and motion are, therefore, inseparably interlinked. Without space and time, all talk of matter and motion is meaningless. Equally, to talk about space and time, without reference to matter and motion, is to deal with empty abstractions. As Engels comments, “the basic forms of all being are space and time, and being out of time is just as gross an absurdity as being out of space.”[8]

Explanations of the development of the Universe which involve an “amorphous” Universe that “crystallises” to create time and space are therefore empty and abstract also. The Universe is indeed undergoing a continual process of evolution and development – but it is not simply “The Universe” that is evolving; rather it is matter – present in infinite quantities and continuing eternally in all directions – that is continually in motion, producing evolution through the process of mutual interaction. This motion, which has no beginning and no end, gives rise to a dialectical development and evolution in the Universe – to an infinite process of interaction and change, with temporary dynamic equilibria forming, and with similar, but never precisely identical, developments unfolding over time.

Another theory in modern cosmology is the “eternal inflation” theory, which hypothesises that our Universe is itself an inflating bubble within another Universe. The theory is based on a mixture of quantum fluctuations and vacuum energy (the energy of empty space), which cause new Universes to be born and grow within old ones. Whilst such a theory avoids the mechanical concept of infinity posed by the steady-state or cyclical Universe theories, the idea that new Universes can be created from bubbles of inflating vacuums is equally absurd and undialectical. The theory brings us again to the most obvious question regarding inflation: where does the energy for each new Universe come from? An article in New Scientist magazine explains:

“Inflation, a theory that [Alex] Vilenkin helped to create, starts with a vacuum in an unusually high energy state and with a negative pressure. Together these give the vacuum repulsive gravity that pushes things apart rather than draws them together. This inflates the vacuum, making it more repulsive, which causes it to inflate even faster.

“But the inflationary vacuum is quantum in nature, which makes it unstable. All over it, and at random, bits decay into a normal, everyday vacuum. Imagine the vacuum as a vast ocean of boiling water, with bubbles forming and expanding across its length and breadth. The energy of the inflationary vacuum has to go somewhere and it goes into creating matter and heating it to a ferocious temperature inside each bubble. It goes into creating big bangs. Our Universe is inside one such bubble that appeared in a big bang 13.7 billion years ago.”[9]

We are told, it seems, that the energy for new universes is created from the energy of empty space, which increases as the vacuum bubbles expand. But again, energy cannot be created (or destroyed). You cannot create something from nothing. There is no such thing as a cosmological free lunch. The eternal inflationary theory, therefore, far from resolving the problem of the “beginning of time” that the traditional Big Bang model leads to, simply reintroduces the same problem in another form.

Alongside the concept of “universes within universes”, there are similar theories involving multiple or parallel universes, existing simultaneously as part of a “multiverse”. One such multiverse theory is that of the “branes”, as mentioned earlier, which collide against one another in a higher-dimensional space. Another multiverse theory – born out of quantum mechanics – proposes that new universes are created in every quantum event. This “many worlds” interpretation hypothesises that, rather than there being one single real universe, with one actual history of events and processes, all possible alternative histories are real, existing in a (possibly infinite) number of parallel universes.

These “universes within universes” and “multiverse” ideas have led to all kinds of speculation and conjecture, much of which has a greater resemblance to science fiction than genuine scientific investigation. Most importantly, it should be stressed that there is no evidence – and never can be any evidence – for such theories.

All talk of infinity in relation to the Universe – whether it is in terms of a universe that is infinite in time and space, or of an infinite series of universes, or of an infinite number of parallel universes – inevitably provokes discussion on the concept of infinity itself. This, in turn, allows imaginations to run wild: if we have an infinite Universe, or an infinite number of universes, then surely anything that could happen – no matter how small the probability – would happen, and indeed must have happened already?!

This hypothesis is based on the formal mathematical logic that when an infinitesimal probability meets an infinite number of events, the outcome must be a definite or real event. For example, an infinite number of monkeys tapping away on an infinite number of typewriters, so we are told, will eventually produce the entire works of Shakespeare. But such logic is an empty abstraction that is completely divorced from reality. There is a qualitative difference between an abstract possibility and a concrete possibility, between a possibility and a probability, and between a probability and an inevitability. What is theoretically possible is not necessarily probable; and what is probable is not always actual.
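The formal arithmetic behind the monkey example can be made concrete. What follows is only a minimal sketch: the 27-key typewriter (26 letters plus a space) and the uniformly random keystrokes are illustrative assumptions, not anything specified above, and the function name is hypothetical.

```python
import math

def log10_attempts_needed(phrase: str, alphabet_size: int = 27) -> float:
    """Return log10 of the expected number of independent random attempts
    needed before a uniformly random string of len(phrase) keystrokes
    matches the phrase exactly (assumed 27-key typewriter)."""
    # Chance of one attempt succeeding is (1 / alphabet_size) ** len(phrase),
    # so the expected number of attempts is alphabet_size ** len(phrase).
    return len(phrase) * math.log10(alphabet_size)

phrase = "to be or not to be"
exponent = log10_attempts_needed(phrase)
print(f"{len(phrase)} keystrokes -> roughly 10^{exponent:.1f} attempts")
```

Even a single short line demands an astronomically large, though finite, number of attempts. The formal argument treats that finiteness as a guarantee of eventual success; the calculation itself says nothing about whether any real process behaves like this abstraction.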

The real world – both in nature and in history – is not a series of random probabilistic events. Rather, we see processes, dialectically evolving due to internal contradictions; processes that develop with their own inner dynamics and logic. Out of the seemingly chaotic motion and interaction in the Universe arises a certain predictability: similar conditions produce similar results; patterns emerge; tendencies and generalised laws develop.

Take, for example, the process of evolution in the biological world. It is clear to modern science that human beings are neither the creation of intelligent design, nor merely the product of random events. Instead, all evolution is a process of dialectical development – of quantity transforming into quality, and back into quantity. Of course, accident plays a role in this – the possibility of random variations and mixes in individual genes is an essential mechanism in evolution. But these “accidents” will only ever have the power to shape an entire population when they express a necessity – when that specific genetic mix provides an advantage to an organism within its given environment.

Similarly, the works of Shakespeare, or of any writer or artist, can never be the product of accident and random events. Accidental events play a role in shaping an individual, but great works of art and literature are the products of an entire history of cultural development. The works of Shakespeare would be inconceivable without the prior literature of the Hellenic or Classical epics and tragedies.

We see, therefore, how such theories, in trying to avoid the absurdity of a “beginning of time”, only end up creating new absurdities. Such attempts to overcome the contradiction of infinity instead end up displaying variations of “bad infinity” grouped at opposite poles: at the one end we see the mechanical concept of infinity – the idealistic infinity of stasis or cyclical repetition; at the other end we see the chaotic idea of infinity – an infinity composed of purely random events, with no potential for development, evolution, or process.

In contrast to these examples of “bad infinity”, which exist merely as abstractions in the minds of theoretical physicists, we can see every day what real infinity looks like by looking out of the window at the weather: a system that is dynamic and chaotic, but which can also be explained and predicted within limits; a case of matter in motion in which no two days are ever identical, but nevertheless one in which there are material limits and general tendencies that give rise to a certain degree of repetition and similarity.

Real infinity is neither purely mechanical nor completely random, but is a dialectical unity of opposites: of the new and the old, novelty and repetition, chaos and order, random and deterministic, accident and necessity. Such an infinity is an evolutionary process of development and dialectical change, with patterns and tendencies, but without exact repetition; a process of quantity transforming into quality, and back into quantity. Real infinity in the Universe, therefore, is not an infinity of The Universe, but the infinity of matter in motion – an infinity of finite things, with no beginning and no end.

The idealism of quantum mechanics

Quantum mechanics, whilst consistently being validated by experiments, still raises fundamental questions in terms of the interpretation of its results. The dominant paradigm in the field of quantum mechanics is that of the Copenhagen School, named after the city in which the Danish physicist Niels Bohr, who founded this interpretation, worked. According to this paradigm, the state of a particle is not an objective reality, but merely a probability, expressed in terms of a wave function. The “wave function”, however, is no more than a mathematical construct – an equation; an abstract model created by human mathematicians, but promoted to become the basis of all reality by the Copenhagen School of philosophy.

This probabilistic interpretation of the behaviour of atomic and sub-atomic particles means that uncertainty is inherent within any quantum system; the old predictability provided by Newtonian mechanics is lost, and so with it, all sense of causality and law in nature. Given the small scale and high speeds involved at the quantum level, a degree of probability and uncertainty is indeed inevitable. However, the Copenhagen School takes things to an extreme and denies the existence of objective reality, law, and causality altogether.

With this interpretation of quantum mechanics, therefore, we are back to the idealism of the philosophy of Kant, of the “unknowable” thing-in-itself; to the idea of a reality whose true objectivity will always be a mystery to us. This runs counter to the very fundamentals of science, which is based on a materialist method and which asserts that there is lawfulness in nature. Through the process of scientific experimentation and investigation, the inner workings of the Universe can be known to us. This materialist philosophy, which is at the root of both the scientific method and of Marxism, explains that there is no such thing as the “unknowable”, but simply that which is currently unknown.

Even more than that, Bohr and his followers claimed that the properties of a particle are simultaneously all values – in a state of “superposition” – until the point of measurement, at which time the wave function of a particle is said to “collapse” to a single state. This interpretation ultimately leads to a form of subjective idealism, in which there is no objective reality other than that which we observe – a modern-day form of the philosophical question: if a tree falls in the woods and there is nobody around to hear it, does it still make a sound?

The obvious conundrum is: according to the Copenhagen interpretation, at what point in the “act of observation” do the quantum probabilities of the wave function become a reality? At what point does the subjective act of measurement become an objective fact? At the time, the Austrian physicist Erwin Schrödinger mocked the Copenhagen interpretation, devising the famous thought experiment of “Schrödinger’s cat” to show the absurdity of Bohr’s view, which would lead to the result that a cat, placed in a box along with a certain setup of radioactive equipment, could be said to be both dead and alive at the same time until the point of observation!

The implications of the Copenhagen School are that certain experiments involving quantum systems yield results that are seemingly supernatural. Specifically, there is the phenomenon of “entanglement”, in which two particles can be “entangled” in such a way that a property of one particle is always the opposite of the equivalent property of its partner. The two particles are initially in a state of superposition, in which the properties of neither particle are known, but when the property of one particle is observed and the wave function collapses, the property of the second particle can be inferred from the knowledge of the first. The result is that two quantum particles, separated at large distances, seem to be able to communicate information instantaneously to one another, thus breaking the ultimate limit of the speed of light. This phenomenon has yet to be explained by current quantum theories.

Of course, in measuring any aspect of nature, we are forced to interact with the system under observation, and in doing so we have an effect on the properties of the system itself. We cannot place ourselves outside of nature in order to observe it. Scientific experimentation is interactive, in essence a process of applying labour to nature in order to understand its internal relationships, inner causality, and interconnections. But to deny the objectivity of reality and to claim that there is no reality until observation occurs, is to retreat into subjective idealism, into solipsism. It is a retreat ultimately into mysticism, back into the divine realm of the “unknowable” reality beyond the reach of science and thus beyond the possibilities of human understanding also. “God”, as we are repeatedly told, “moves in mysterious ways”.

The materialist method of science, and of Marxism also, is based on the fundamental principle that there is an objective reality, which exists independently of human observation but which can be known to us. As Lenin explained, when ridiculing the subjectivists, who argued that the world ceased to exist outside of the minds of humankind,

“Things exist independently of our consciousness, independently of our perceptions, outside of us…”[10]

“…[T]he existence of the thing reflected independent of the reflector (the independence of the external world from the mind) is a fundamental tenet of materialism. The assertion made by science that the earth existed prior to man is an objective truth.”[11]

What is mass?

The SMPP also leaves scientists scratching their heads in confusion. The main cause of the confusion is the complete lack of rhyme or reason behind the various particles that have been postulated. No pattern can be discerned that explains the complex variety of matter that exists.

There is also the particular question of mass. Why do particles have the masses that they do? What causes mass in the first place? And just what is “mass” anyway?

In general physical terms, mass is a property of all matter. Newtonian physics explains mass in terms of inertia – the resistance of matter to acceleration, that is, a resistance to changes in motion. In the Newtonian paradigm, changes in motion are explained by the concept of “force” – something conceived of as being purely external to the object in question.

This one-sided view reflects the mechanical view of the whole Newtonian paradigm. A dialectical view, by contrast, sees the interconnectivity and two-sidedness of any process, including the change in motion of matter. As Engels explains in his great unfinished work, The Dialectics of Nature,

“All natural processes are two-sided, they are based on the relation of at least two operative parts, action and reaction. The notion of force, however, owing to its origin from the action of the human organism on the external world, and further terrestrial mechanics, implies that only one part is active, operative, the other part being passive, receptive…The reaction of the second part, on which the force works, appears at most as a passive reaction, as a resistance. Now this mode of conception is permissible in a number of fields even outside pure mechanics, namely, where it is a matter of the simple transference of motion and its quantitative calculation. But already in the more complicated physical processes it is no longer adequate…”[12]

Mass, as a resistance to changes in motion, can therefore only be defined as something relational between objects, in other words, in terms of an interaction between matter.

Furthermore, in the mechanical interaction of two objects, momentum, defined as mass multiplied by velocity (mv), is always conserved. The kinetic energy of matter in motion is given by the formula ½mv², whilst Einstein, with his famous equation E = mc², showed the equivalence of mass and energy. It is a well-known fact of nature that mass and energy must also always be conserved in any process. All of this further shows how mass can only properly be conceived of as a property of matter; a property that arises out of the relationships – i.e. the interactions – of matter in motion.
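The conservation statements above can be checked numerically for the simplest case. The following is a minimal sketch, assuming a head-on, perfectly elastic collision between two bodies in one dimension; the masses, velocities, and function names are hypothetical values chosen purely for illustration.

```python
import math

def elastic_collision_1d(m1, v1, m2, v2):
    """Return the two velocities after a head-on, perfectly elastic
    collision in one dimension (standard textbook result)."""
    total = m1 + m2
    v1_after = ((m1 - m2) * v1 + 2 * m2 * v2) / total
    v2_after = ((m2 - m1) * v2 + 2 * m1 * v1) / total
    return v1_after, v2_after

def momentum(m, v):
    return m * v                # p = mv

def kinetic_energy(m, v):
    return 0.5 * m * v ** 2     # E = (1/2) m v^2

# Illustrative values only: masses in kg, velocities in m/s.
m1, v1, m2, v2 = 2.0, 3.0, 1.0, -1.0
v1_after, v2_after = elastic_collision_1d(m1, v1, m2, v2)

p_before = momentum(m1, v1) + momentum(m2, v2)
p_after = momentum(m1, v1_after) + momentum(m2, v2_after)
e_before = kinetic_energy(m1, v1) + kinetic_energy(m2, v2)
e_after = kinetic_energy(m1, v1_after) + kinetic_energy(m2, v2_after)

print("momentum conserved:", math.isclose(p_before, p_after))
print("kinetic energy conserved:", math.isclose(e_before, e_after))
```

Neither conserved quantity can be computed from one body alone: both totals are defined only over the interacting pair.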

One of the great advances of the SMPP is that mass is no longer conceived of as being something inherent to an object. A mechanical and idealistic view of nature sees the properties of things as being something inherent and intrinsic. Thus it can be seen how in ancient times, the temperature (i.e. hotness) of an object was considered to be a result of it possessing a certain amount of the fire element. Likewise, in the seventeenth century, a substance called phlogiston was considered to give matter the property of combustibility. The colour of an object was once considered to be an inherent property of that object, so that red things are red because they have the property of redness. Or from the subjectivist viewpoint, colour is simply due to our individual sense perception. In the sphere of social sciences, the capitalists talk of “human nature”, as an innate selfishness of all people, to justify their exploitative system of greed. Meanwhile, the value of any commodity, according to bourgeois economic theory of “marginal utility”, is simply the result of the subjective preferences of abstract individuals.

Dialectical philosophy, backed up by all of the discoveries of modern science, demonstrates, in contrast, how the properties of things are always truly an expression of relationships between things. Properties emerge from the interactions between things. For example, thanks to modern theories of thermodynamics, we know that the temperature of an object is an expression of the motion of atoms and molecules, whilst combustion today is known as a chemical interaction between a fuel and an oxidant. The property of colour is now known to arise out of the interaction between light (photons) and the electrons in the atoms of an object. These absorb photons of certain frequencies (energy values in quantum terms) and emit photons at certain other specific frequencies which are in turn detected by receptor cells in the eyes and turned into electric nerve signals to be interpreted by the brain.

The Marxist, that is, dialectical and materialist, view of history and the economy explains how human behaviour is a product of society and the mode of production, whilst the value of a commodity is an expression of a social relation, which can only be determined through the act of exchange.

In the SMPP, the mass of any particle is no longer inherent or intrinsic, but is described in terms of the interaction between the particle and the “Higgs field”, via a carrying particle known as the “Higgs boson”. Whilst this conception of mass as an interactive property of matter is a step forward, the use of the Higgs field and the Higgs boson to explain this in reality explains nothing. What creates the Higgs field? How does the three-way interaction between the Higgs field, the Higgs boson and other particles give rise to the property of mass? And why does this interaction provide particles with the values of the masses that we observe? Furthermore, there is the less well-reported problem that the Higgs theory only accounts for a fraction of the mass of matter.

As with the examples of the “inflaton” particle in relation to inflation, the “graviton” particle and the force of gravity, or “WIMPs” and the question of dark matter, in resorting to terms such as “field” and “boson”, scientists have simply applied labels and invented new hypothetical particles to account for unexplained phenomena. A real understanding of mass as an emergent property must arise, however, not out of mysterious fields and “God particles”, but from studying the way matter interacts with other matter. This is the only truly materialist – that is, scientific – way of explaining any phenomenon in nature.

“Fundamental” particles

The question of why various particles have the mass they do arises from the same confusion. But there are also other sides to this issue and other questions that have not been asked. Why should the masses of the various “fundamental” particles have a nice pattern to them? Why should nature always display “beauty” in its arrangements? And why do we consider these “fundamental” particles to be “fundamental” at all?

The first two questions highlight one of the main problems in modern cosmology – the tremendous philosophical idealism that has crept in, whereby theories are considered right or wrong on the basis of mathematical aesthetics. Our models and theories are always and everywhere only an approximation of a Universe that is infinitely complex in every way. In many cases, at certain scales, simplicity arises out of complexity – and vice-versa. Thus the complexity of nature can sometimes be expressed by relatively simple equations. But to assume and seek out simplicity in nature is to turn the whole problem on its head. Our ideas, mathematical, scientific or otherwise, are a reflection of the world around us, not the other way around. This is the fundamental basis of materialism in science and Marxism. Whether ideas, theories, or equations are “beautiful” or not, their usefulness is contingent upon how accurately they reflect material reality and allow us to gain a deeper understanding of the workings of nature. To assert that nature must conform to our subjective idea of beauty is pure idealism.

The third question concerning the “fundamentality” of the particles in the SMPP is related to the first two. Where we expect to see patterns in nature as a result of our theories – which are the generalisations of our past observations – but instead see inexplicable randomness, this suggests that a deeper understanding of the causal relationships and interconnections of the phenomena is necessary.

The so-called “uncertainty principle” in quantum mechanics is a case in point. We observe phenomena at the atomic and sub-atomic levels that are seemingly random and which the current theories cannot explain. But rather than delving deeper in order to reveal the real inner connections within these phenomena, the advocates of the Copenhagen School simply erect a barrier of mysticism and declare the inner workings at the quantum scale to be “unknowable”. This is counter to the whole history and method of science, the task of which has always been to find explanations for what was previously thought inexplicable, to provide predictability where there was once uncertainty and to uncover laws where before we saw only randomness.

Patterns and even generalised laws can emerge from seemingly random, chaotic, nonlinear, and unpredictable processes. For example, to model and predict the movement of every particle in a cylinder of gas would be impossible. But due to the interaction of many billions of gas particles, definite laws of thermodynamics arise that relate the pressure, temperature and volume of the gas. Patterns emerge from randomness; order arises from chaos; predictability is seen within the unpredictable. Even the processes of quantum mechanics, which are among the few examples of processes still considered to be truly random, produce predictable results and patterns, like the famous interference patterns seen in the double-slit experiment.
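The gas example can be illustrated with a small numerical sketch. It rests on several assumptions that are not in the text: the particles are treated as an ideal gas; their velocities along one axis are drawn at random from a Gaussian of variance kT/m (the Maxwell-Boltzmann form); and the molecular mass is a rough figure for nitrogen. Because the velocity distribution is assumed rather than derived, this illustrates the emergence of a regular law from random individual motion rather than proving it.

```python
import random

K_B = 1.380649e-23  # Boltzmann constant in J/K

def kinetic_pressure(n_particles, volume, temperature, mass, seed=0):
    """Estimate pressure from randomly sampled particle velocities.

    Kinetic theory gives P = N * m * <v_x^2> / V, where <v_x^2> is the
    mean squared velocity along one axis."""
    rng = random.Random(seed)
    sigma = (K_B * temperature / mass) ** 0.5  # assumed velocity spread
    mean_vx2 = sum(rng.gauss(0.0, sigma) ** 2
                   for _ in range(n_particles)) / n_particles
    return n_particles * mass * mean_vx2 / volume

def ideal_gas_pressure(n_particles, volume, temperature):
    """Macroscopic law: P = N * k * T / V."""
    return n_particles * K_B * temperature / volume

# Illustrative values only: 100,000 sampled particles in 1e-21 m^3 at 300 K,
# with an approximate nitrogen molecule mass of 4.65e-26 kg.
n, vol, temp, m_n2 = 100_000, 1e-21, 300.0, 4.65e-26
simulated = kinetic_pressure(n, vol, temp, m_n2)
predicted = ideal_gas_pressure(n, vol, temp)
print(f"simulated / predicted pressure: {simulated / predicted:.3f}")
```

No individual sampled velocity is predictable, yet the ratio settles close to one, and the agreement tightens as the number of particles grows.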

Over time, through the development and deepening of scientific understanding, statistical relationships that simply describe patterns and phenomena may be replaced by physical models or scientific laws that explain the interconnectedness and causal relationships within these phenomena. Even so-called scientific “laws”, however, are only accurate at certain scales and within certain limits. Such laws are always only an approximation of objective reality and will always contain errors, inaccuracies, and uncertainty to a certain degree.

In the case of the SMPP, the problem is in assuming that we can talk of “fundamental” particles at all. The whole history of the science of physics has been to continually reduce and shrink what we consider to be the “fundamental” building blocks of nature. First there was the concept of the atom – originally hypothesised by the ancient Greek philosopher Democritus (the word “atom” derives from the Greek for “indivisible”). Later came the discoveries by Rutherford and others, which led to an atomic model consisting of electrons, protons, and neutrons. Further research led to the discovery of quarks, which make up protons and neutrons. Who is to suggest that quarks now represent the limit of scientific discovery in terms of particle physics?

The difficulty for many scientists lies in the answer to this question. If we assume that quarks are composed of even smaller particles, then what are these smaller particles composed of? And so on, and so on, ad infinitum. But that is precisely the point – one can always divide further. Just as there is no such thing as an indivisible “smallest” number, so there is no “fundamental” particle in nature.

A century ago, the main chemical and physical differences between all one hundred or so elements were discovered to be based on a relatively simple underlying pattern that involved different combinations of no more than three “fundamental” sub-atomic particles. This discovery marked an important step forward in understanding the structure of matter. Now, the variety of sub-atomic particles postulated runs into dozens and science is no further forward in understanding any underlying pattern.

The solution to the question of so-called “fundamental” particles lies in an infinite series of finite objects – an infinite regression of things composed of other things. And it is the interaction between things at each stage of this series that gives rise to the properties that emerge at higher levels.

This is the answer to problems of the SMPP and of quantum mechanics also. Where our current theories cannot explain what we currently see, we must delve deeper in the search for a true, more accurate and complete description of nature; it is a search that has no limit, because of the limitless complexity of the Universe in every direction.

Irreconcilable theories

On top of all these problems with the various cosmological theoretical components described above, there is one issue that bothers scientists in the field above all else: the incompatibility of the different pillars of modern cosmology, and in particular, the irreconcilability of quantum mechanics and the SMPP with general relativity.

Most of the time this incompatibility is not relevant, as quantum mechanics only applies at small, sub-atomic scales, whilst general relativity is used to describe gravity and the effect of large masses on a cosmic scale. Under the SMBBC description of the Big Bang, however, all the matter of the entire Universe was initially said to be concentrated in a single point. Similarly, it is currently hypothesised that a singularity, with a finite amount of matter concentrated in an infinitesimally small point, exists at the centre of every black hole, formed from stars (of a certain mass) collapsing in on themselves under the strength of their own gravity. Such hypotheses pose large problems, as they involve dealing with both a small scale and a large mass – hence the attempts to reconcile these twin pillars of modern cosmology.

The irreconcilability of quantum mechanics and general relativity has many guises. Firstly, there is the way in which each theory treats space and time. In quantum mechanics, space and time are discrete, with a minimum length known as the “Planck length”. In general relativity, space – or more precisely, space-time – is continuous. The “fabric” of space-time is frequently referred to, with the analogy of objects rolling over a rubber sheet often used to explain how the force of gravity arises according to the theory of general relativity.

From a dialectical and materialist perspective, both interpretations of space and time are flawed, in that space and time are not things-in-themselves, but are relational expressions or properties of matter in motion. As discussed earlier in relation to “phase change” theories of the Big Bang, space and time are not tangible, material, physical objects, and cannot, therefore, be considered either continuous or discrete. To talk of “time” and “space” without reference to matter and motion is to talk about empty abstractions, devoid of any real content. Time, space, matter and motion are inseparable.

Secondly, there is the problem of the four “fundamental” forces described by these two theories. At the small scale, the SMPP describes electromagnetism, the strong nuclear force and the weak nuclear force in terms of the interactions between matter via bosons – force-carrying particles. At the large scale, the fourth force, gravity, is explained without recourse to any “boson”, but via general relativity, with matter (mass-energy) affecting the curvature of space-time and space-time in turn affecting the motion of matter. Some have posited the existence of a “graviton”, a gravity boson, but its existence is as yet unproven.

The issue with the question of how the four “fundamental” forces arise, and the divergence in how the SMPP and general relativity explain these forces, is a result of the mechanical and one-sided view of what “force” means in the first place, as discussed earlier. This results from the Newtonian paradigm of the laws of motion, in which each object is analysed in isolation, with the change in motion of any object being due to an external force. This is expressed in physical terms as force being equivalent to the rate of change of momentum, and it is often simplified to F = ma, where F is force, m is mass and a is acceleration (a change in velocity).
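The step from “change in momentum” to F = ma holds only when the mass is constant. A minimal sketch of that simplification follows, using hypothetical values and a function name invented for illustration.

```python
import math

def force_from_momentum(mass, v_before, v_after, dt):
    """Average force as the change in momentum per unit time: F = dp / dt.
    The mass is assumed constant over the interval."""
    return (mass * v_after - mass * v_before) / dt

# Illustrative values only: a 2 kg body accelerating at 3 m/s^2 for 0.5 s.
mass, acceleration, dt = 2.0, 3.0, 0.5
v_before = 1.0
v_after = v_before + acceleration * dt

force = force_from_momentum(mass, v_before, v_after, dt)
print("F = dp/dt agrees with F = ma:",
      math.isclose(force, mass * acceleration))
```

Both expressions give the same number here only because the mass was held fixed; where mass changes during the motion, the momentum form is the more general one.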

To express changes in motion in such a way, however, is to portray each term of the equation as something tangible, with an external force that acts on an object (with mass) and causes a change in motion (an acceleration). In other words, we resort to a form of idealism, in which the elements of the equation, which are themselves abstractions, are conceived of as real, material things. The reality, however, is matter in motion, in all its complexity, which can never fully be captured by any formula. All laws, equations, and mathematical models are simply useful abstractions of this dynamic and interconnected motion.

Science has unfortunately been stuck with this mechanical Newtonian view ever since, which works its way into every field of investigation. Where there is motion, we assume there is a force responsible for such motion. From the standpoint of dialectical materialism, however, the motion of matter is primary. Any “force” is merely an expression of the relationship between changes in motion when matter mutually interacts. To assign a force to any form of motion we do not understand is simply to cover for our current lack of understanding of the phenomena under investigation. Going one step further by saying that forces are due to “force-carrying particles” is no better. One might as well say that heat is due to hotness. As Engels explains:

“[I]n order to save having to give the real cause of a change brought about by a function of our organism, we substitute a fictitious cause, a so-called force corresponding to the change. Then we carry this convenient method over to the external world also, and so invent as many forces as there are diverse phenomena.”[13]

There is no reason, therefore, why the three “forces” described by the SMPP should be described in the same terms as the “force” of gravity. The SMPP and general relativity are, like all models, only approximations which describe the motion of matter at different scales. But the laws for how matter interacts are not necessarily the same at different scales. At a certain point, quantity transforms into quality and different phenomena, with different laws, emerge.

We have, for example, as described earlier, the laws of thermodynamics which emerge from the multitude of interactions that take place between large numbers of particles and which describe properties such as temperature and pressure, both properties that are meaningless when talking about an individual particle. In other words, we have phenomena in which the whole is more than the sum of its parts.

Similarly, the laws of chemistry, describing interactions at the atomic and molecular level, cannot be used to predict the behaviour of a whole biological organism. Likewise, knowledge of biological laws does not give much insight into the emergent patterns and general laws that can be observed in history, society, and the economy; for this, one requires the materialist method of Marxism.

What is a law?

The same reasoning explains why quantum mechanics and general relativity are incompatible: because both are, like all laws or theories, only approximations that can describe the motion of matter within certain limits. As Engels explains:

“[T]here is absolutely no need to be alarmed at the fact that the stage of knowledge which we have now reached is as little final as all that have preceded it…Truth and error, like all thought-concepts which move in polar opposites, have absolute validity only in an extremely limited field…As soon as we apply the antitheses between truth and error outside of that narrow field which has been referred to above it becomes relative and therefore unserviceable for exact scientific modes of expression; and if we attempt to apply it as absolutely valid outside that field we really find ourselves altogether beaten: both poles of the antithesis become transformed into their opposites, truth becomes error and error truth.”[14]

The role of science is to continually improve our models in order to extend their usefulness; to increase our understanding of all forms of motion and to gain a greater accuracy of prediction. This was the basis for the leap from Newtonian mechanics to the theories of relativity and quantum mechanics. Newton’s laws are adequate for most day-to-day experiences but cannot explain motion at small scales or at high speeds.

This, then, is the infinite regression of scientific progress – a series represented by successive generations of research, which over time expand our understanding further and come closer to approximating and capturing the infinitely complex nature of the Universe. As Lenin comments:

“Human thought then by its nature is capable of giving, and does give, absolute truth, which is compounded of a sum-total of relative truths. Each step in the development of science adds new grains to the sum of absolute truth, but the limits of the truth of each scientific proposition are relative, now expanding, now shrinking with the growth of knowledge.”[15]

Quantum mechanics and general relativity are excellent theories that have been proven to provide accurate predictions within certain limits. To try and unify the two, however, is a futile task, for they are describing motion at different scales, each with its own set of laws that emerge out of the interaction of matter in motion at those scales.

The main reason for the attempts to unify these theories is the Big Bang hypothesis – a hypothesis that is itself far from proven. What is more, even if there were such a point when a large mass was concentrated at a small scale, this would necessarily involve new forms of interactions between matter, meaning new emergent laws, which would be different from those described by quantum mechanics, general relativity, or even a formalistic combination of the two. The whole is always more than the sum of its parts.

All talk, therefore, of a “Theory of Everything” is utopian. All theories are relative truths, approximations of the dynamics of motion within limits. Scientific laws in nature, as with any laws in history, the economy, or society, are not cast-iron laws, but are more accurately described as “tendencies”: generalisations arising from the processes of matter in motion, in which similar conditions produce similar results. There is no law that is absolute, universally applicable to all situations at all times and at all scales.

To hypothesise the possibility of such an absolute law is to view the Universe idealistically, to see “laws” as primary and the material world as a secondary reflection of these laws. The dialectical materialist view asserts the opposite: matter in motion is primary and the laws of nature emerge from the interactions of this matter. Such laws are not written into the fabric of the Universe, but are abstracted and generalised by science in order to better understand and manipulate the world around us. There is no “computer programmer in the sky” who has specified and described all the laws of nature in advance, pressing “go” and sitting back to watch motion unfurl according to these laws.

This is the idealistic concept of “laws” that has been inherited from the Newtonian paradigm and its mechanical “pure determinism” of a “clockwork Universe”, in which all future motion can be specified and predicted from a knowledge of initial conditions and the laws at play. The dialectical materialism of Marxism, confirmed by all of modern science, including the ideas of chaos theory, demonstrates how matter in motion is fundamental, our laws being only approximate descriptions of this complex dynamism and mutual interaction.

The only real “Theory of Everything”, therefore, is a theory, derived from the generalised experiences of the concrete process we see in nature, society, and thought, that describes the general laws of all motion; the laws of change itself. Such a theory is explained by the Marxist philosophy of dialectical materialism.

One step forward, two steps back

There are a multitude of contenders for the Theory of Everything throne. These include supersymmetry, string theory, M-theory and a number of others besides. There are many different varieties and flavours within each theory, with new hypotheses, assumptions, and extensions made at every turn when observations and experiments fail to confirm the predictions (if there are even any predictions!) of the original theory.

For example, in supersymmetry, a whole new range of particles is proposed to explain and unify three of the four forces of nature: electromagnetism, the weak nuclear force, and the strong nuclear force. However, experiments using the Large Hadron Collider have so far failed to find any evidence for supersymmetry. Rather than accepting the death of supersymmetry, academics have developed ever more elaborate versions in a desperate attempt to keep the theory (and thus their careers!) alive. It is a great irony that in their search for the “beauty” of mathematical simplicity, the theoretical physicists end up producing ever more unwieldy models containing an ever expanding set of particles and parameters.

Elsewhere we have string theory, which hypothesises that all the elementary particles of the SMPP are in fact variations of a single fundamental vibrating string, with different energies of vibration accounting for the array of particles that we see. The supposed elegance of string theory is that it apparently provides a Theory of Everything that unifies quantum mechanics and general relativity. The downside is that the theory only works if we in fact, despite all our experiences, live in a ten-dimensional Universe consisting of nine spatial dimensions plus time. These extra six spatial dimensions are, so the theory goes, unobservable – and thus untestable – since they are wrapped up and compacted to a minuscule scale.

In fact there are five different string theories which can be subsumed into a single theory, known as M-theory, if we assume an eleven-dimensional Universe. Extending from M-theory is the concept of new mathematical objects, such as the “branes” discussed earlier, which are said to be floating about and colliding into one another, thus causing Big Bang-like events.

If this all sounds rather fanciful, it is because it is. It should be emphasised that no empirical evidence exists for any of these fantastical claims. String theory and M-theory are simply a case of one set of assumptions and conjectures stacked upon another. They are nothing but abstract mathematical models, theoretical toys and academic playthings, which, whilst internally consistent in terms of their mathematics, have neither observations to back them up nor verifiable predictions to support them. Astonishingly, M-theory, whilst widely discussed and researched in the field of theoretical physics and cosmology, is not actually a theory at all. As yet, nobody has formally written down what it would or should look like; it simply exists as the idea for an idea.

Such theories are completely untestable and are closer to the medieval discussions by priests about “how many angels can dance on the head of a pin” than to genuine science in any meaningful sense. Yet despite these severe limitations, such theories are presented to the public as viable scientific ideas by well-known professors such as Brian Greene and other celebrity scientists. It seems that for every step forward taken in the field of cosmology there are two steps back, with countless hours of labour-time and enormous sums of money being wasted in the pursuit of such obscurantist and idealistic flights of fancy.

The cul-de-sac of cosmology

A growing number of scientific researchers and writers have become exasperated with the current state of affairs and, having grown tired of the lack of progress made in the field of cosmology, are calling for a radical overhaul. It has become clear to many that after centuries of hard work pulling ourselves up the side of the mountain of discovery, we have been on a plateau of knowledge for some time.

Others are less generous in their description of modern cosmological research, considering it to be little more than a rather expensive and time-wasting journey down a scientific dead-end. Jim Baggott, a well-known science writer and former academic, writes in his book, Farewell to Reality, about the growing gap between modern cosmology and what would typically be called “science”.

In the opinion of Baggott and many others, current cosmological research has increasingly become completely theoretical in nature and is based entirely on the beauty and internal consistency of the descriptive mathematics, with little recourse to actual evidence or observations. Baggott describes such theories, dreamt up in the offices of highly respected universities and institutes, as being nothing more than “fairy-tale physics”, theories that have long ago ceased to play any role in actually improving our understanding of the objective reality of the Universe.

“In fairy-tale physics,” Baggott bemoans, “we lose sight of the empirical content, almost completely…If there is one theme underpinning contemporary theoretical physics, it seems to be an innate inability to calculate anything, with the not-so-apologetic caveat: well, it still might be true…

“…The issue…is not metaphysics per se. The issue is that in fairy-tale physics the metaphysics is all there is. Until and unless it can predict something that can be tested by reference to empirical facts, concerning quantity or number, it is nothing but sophistry and illusion…

“…At what point do we recognise that the mathematical structures we’re wrestling to come to terms with might actually represent a wrong turn…” [16] (emphasis in the original)

In this view, the field of modern cosmology has become, at best, a fairly harmless form of Keynesianism – a way of employing and funding a few hundred (or thousand) scientists who would otherwise be out of work. At worst, current cosmological research is a colossal waste of scientific resources which, far from being harmless, is actually damaging the wider credibility of science by dressing up nonsense as serious and important theoretical research. As Baggott comments:

“[W]hat does it matter if a few theorists decide that it’s okay to indulge in a little self-delusion? So what if they continue to publish their research papers and their popular science articles and books? So what if they continue to appear in science documentaries, peddling their metaphysical world views as science? What real harm is done?

“I believe that damage is being done to the integrity of the scientific enterprise. The damage isn’t always clearly visible and is certainly not always obvious. Fairy-tale physics is like a slowly creeping yet inexorable dry rot. If we don’t look for it, we won’t notice that the foundations are being undermined until the whole structure comes down on our heads…this stuff clearly isn’t science.”[17]

Time reborn

The crisis in cosmology, however, is causing some within the field to fundamentally challenge the dominant paradigm. Amongst those who are seeking a way out of the current morass is Lee Smolin, a well-known academic currently at the Perimeter Institute for Theoretical Physics, who, in his book “Time Reborn”, argues that the whole field of cosmology, from quantum mechanics through to general relativity, is held back by what amounts to the philosophical problem of how science deals with the question of time.

Although not a conscious or consistent dialectical materialist, Smolin correctly highlights many of the fundamental flaws in the method and outlook of current theoretical physics. For Smolin, the problem originates with the Newtonian method of science – a method that, whilst extremely progressive at the time, is now holding back modern physics. The Newtonian method is fundamentally that of mechanics, which examines the movements and motions of isolated systems in terms of particles and forces acting upon them, as Smolin describes:

“The success of scientific theories from Newton through the present day is based on their use of a particular framework of explanation invented by Newton. This framework views nature as consisting of nothing but particles with timeless laws. The properties of the particles, such as their masses and electric charges, never change, and neither do the laws that act on them.”[18]

But Smolin also goes on to highlight one of the limitations of such a method involving isolated systems (or “physics in a box” as he frequently refers to it): the fact that, in reality, one can never truly isolate a system, for there is always a dialectical interconnectivity between matter in motion:

“This framework is ideally suited to describe small parts of the Universe, but it falls apart when we attempt to apply it to the Universe as a whole…

“When we do cosmology, we confront a novel circumstance: It is impossible to get outside the system we’re studying when that system is the entire Universe.”[19] (emphasis in the original)

Throughout his book, Smolin explains how physicists ever since Newton have tried to represent the dynamism and change of matter in motion through the use of timeless and absolute mathematical equations and models, which are necessarily simplifications and abstractions of infinitely complex processes and which thus lose their applicability when used to analyse the Universe as a whole.

By placing mathematics above reality and forgetting the approximate nature of its models, theoretical physics has come up against seemingly insurmountable barriers in all the key pillars of cosmology: the SMPP, quantum mechanics, general relativity, and the SMBBC. Physicists have led themselves down a blind alley in search of a “beautiful” and “elegant” Theory of Everything. As Smolin notes:

“It remains a great temptation to take a law or principle we can successfully apply to all the world’s subsystems and apply it to the Universe as a whole. To do so is to commit a fallacy…

“The Universe is an entity different in kind from any of its parts. Nor is it simply the sum of its parts…

“What we mean when we call something a ‘law’ is that it applies to many cases; if it applied to just one, it would simply be an observation. But any application of a law to any part of the Universe involves an approximation…because we must neglect all the interactions between that part and the rest of the Universe.”[20]

“All the theories we work with, including the Standard Model of Particle Physics and general relativity, are approximate theories, applying to truncations of nature…

“…This means that the Standard Model of Particle Physics, which agrees with all known experiments so far, must be considered an approximation…It ignores currently unknown phenomena that might appear were we able to probe to shorter distances…

“The missing phenomena could include not only new kinds of elementary particles but also heretofore unknown forces. Or it could turn out that the basic principles of quantum mechanics are wrong and need modification to correctly describe phenomena lurking at shorter lengths and higher energies…

“…physics is a process of constructing better and better approximate theories. As we push our experiments to shorter distances and larger energies, we may discover new phenomena, and if we do, we’ll need a new model to accommodate them.”[21]

In short, the fundamental problem with the Newtonian framework is its mechanical and undialectical method, involving external forces and eternal laws that exist outside of time and space in an ideal and absolute world. But such an idealistic view of the “laws of physics” is in contrast to the dialectical reality of nature, as discussed earlier: that the laws of physics are not imposed on matter, but emerge from its interactions, as Smolin himself comments:

“Laws, then, are not imposed on the Universe from outside it. No external entity, whether divine or mathematical, specifies in advance what the laws of nature are to be. Nor do the laws of nature wait, mute, outside of time for the Universe to begin. Rather the laws of nature emerge from inside the Universe and evolve in time with the Universe they describe.”[22]

Here then, without expressing it in such terms, we have a more dialectical – although not fully worked out – view of the Universe being presented by an established and renowned academic, cosmologist and theoretical physicist, a view in contrast to the timeless and absolute Newtonian paradigm, a view that represents a fundamental shift in outlook and method.